AI Mortality Predictor Enhances End-of-Life Care and Palliative Support

In a prognostic study involving 57 physicians and 17 advanced practice clinicians, an artificial intelligence model significantly outperformed oncologists in predicting short-term mortality, with a positive predictive value of 60% vs 34.8%.

A 90-day mortality model uses machine learning to identify patients with cancer who are at high risk of near-term mortality. Implementing the model prompts clinicians to initiate goals-of-care conversations with patients so that end-of-life preferences can be discussed and carried out.1

In a prognostic study involving 57 physicians and 17 advanced practice clinicians, “the artificial intelligence [AI] model significantly outperformed oncologists” in predicting short-term mortality, with a positive predictive value of 60% vs 34.8%.1 The model, developed by City of Hope, achieved an accuracy score of 81.2% (95% CI, 79.1%-83.3%) and was trained with health record data spanning April 24, 2019, through January 1, 2023.
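
Positive predictive value is the share of patients flagged as high risk who did in fact die within the prediction window. The minimal sketch below shows the arithmetic only; the function name and the patient counts are hypothetical, chosen so the ratios land near the reported percentages, and are not taken from the study.

```python
def positive_predictive_value(true_positives: int, false_positives: int) -> float:
    """Return TP / (TP + FP): the fraction of flagged patients who died in the window."""
    flagged = true_positives + false_positives
    if flagged == 0:
        raise ValueError("no patients were flagged as high risk")
    return true_positives / flagged


# Hypothetical counts for illustration only (NOT the study's data).
model_ppv = positive_predictive_value(true_positives=60, false_positives=40)
clinician_ppv = positive_predictive_value(true_positives=8, false_positives=15)

print(f"model PPV: {model_ppv:.1%}")
print(f"clinician PPV: {clinician_ppv:.1%}")
```

A higher positive predictive value means fewer patients are flagged unnecessarily, which matters when each flag is meant to prompt a goals-of-care conversation.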

“We need to do better with effectively balancing the best we have to offer in medical care and scientific advancements with the intentional provision of adequate time for patients and their families to reflect, decide, and ensure that every aspect of the care we offer is value based, especially during critical moments,” Finly Zachariah, MD, said in an interview with Targeted Therapies in Oncology. Zachariah is an expert in palliative medicine and is an associate clinical professor in the Department of Supportive Care Medicine at City of Hope, a comprehensive cancer center in Duarte, California. He is also cofounder and chief medical officer of Empower Hope and co-first author of the 90-day mortality paper along with Lorenzo A. Rossi, PhD.

Studies show that clinician and patient predictions of near-term mortality are generally inaccurate and overly optimistic, tending to overestimate survival.2 Another reason for implementing this model, Zachariah explained, is that clinicians can become so focused on pursuing the next therapy or cure that they overlook the chance to address the potential for near-term mortality.

The 90-day mortality model gives the clinician an opportunity to step back and consider supportive care medicine by addressing quality-of-life indicators. Patients may favor pursuing advanced therapies, Zachariah continued, but at the same time recognize life’s brevity and want to be at home with their families rather than in the intensive care unit connected to machines. The latter can cause posttraumatic stress disorder in the families they leave behind, which makes it all the more important that patient preferences be stated and honored. “This model helps to confirm that the last few weeks or months are most aligned to the patient’s palliative care preferences and that insight is provided to the oncologist to have those relevant conversations,” Zachariah said.

Other Centers’ Data

In comparison, a 180-day mortality model published by Penn Medicine investigators in JAMA Oncology showed good discrimination and a 45.2% positive predictive value.3,4 When performance status and comorbidity-based classifiers were added, the model favorably reclassified patients.3 Study researchers also discovered that reminders triggered by machine learning greatly reduced the use of intense chemotherapy and other strong treatments toward the end of life. Past studies indicate that such treatments are linked to lower quality of life and adverse events, sometimes causing avoidable hospital stays in the final days.4,5

Workflow Implementation

Technology platforms often require users to go outside of their daily workflow to access the information, Zachariah explained. “This can lead to very low adoption rates because clinicians are incredibly busy. They want to do the right thing and are open to doing the right thing, but if the program makes it harder for them, then it becomes a challenge,” he said. The team designed the program to present this information to clinicians in a way that fits their workflow, asking questions such as, “Where would we normally go in the chart for this type of information?” and “How do we strategically place this information so it can be utilized?”

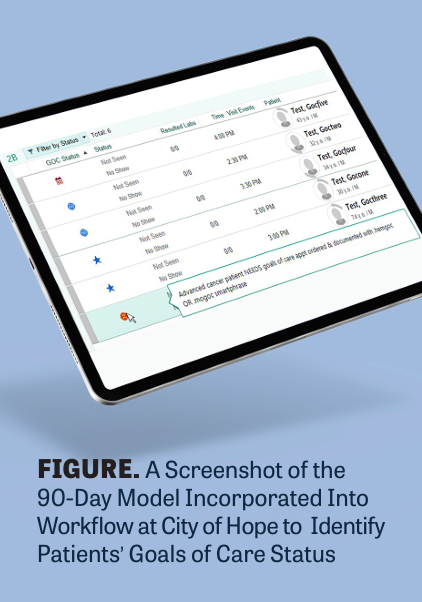

The team created a custom column called “Goals of Care Status.” This column was made available for both outpatient and inpatient charts and ensured transparency for clinicians, making it easy to identify which patients needed conversations regarding their goals of care and which patients already had them (FIGURE).

If a patient is identified by the AI model while hospitalized, the system selectively generates an interruptive alert within the clinician’s workflow, Zachariah explained. This alert informs the clinician of the top 6 factors influencing the model’s prediction for that specific patient, allowing the clinician to review and confirm whether they agree with the model’s assessment.
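
The article does not say how the top 6 factors are derived. As a rough sketch, assuming the model exposes a signed per-feature contribution score for each patient (an assumption, as are the function name, feature names, and values below), the factors shown in an alert could be selected by ranking contributions by magnitude:

```python
def top_factors(contributions: dict[str, float], k: int = 6) -> list[tuple[str, float]]:
    """Return the k features with the largest absolute contribution, largest first."""
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:k]


# Hypothetical per-patient contributions (positive values raise predicted risk).
patient_contributions = {
    "albumin": 0.42,
    "hemoglobin": 0.18,
    "age": 0.05,
    "sodium": -0.03,
    "weight_change": 0.27,
    "heart_rate": 0.11,
    "platelets": -0.09,
    "creatinine": 0.15,
}

alert_factors = top_factors(patient_contributions)
for name, value in alert_factors:
    print(f"{name}: {value:+.2f}")
```

Ranking by absolute value keeps factors that lower the predicted risk in view alongside those that raise it, which gives the clinician a fuller basis for agreeing or disagreeing with the assessment.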

Within the alert is a list of potential actions: a reminder of note templates for goals of care, advance care planning billing codes, and orders to enlist the assistance of palliative care/supportive care or social work services, Zachariah explained. “We then supplemented this strategy with email alerts that contain a list of the upcoming patient appointments that identifies who may benefit from these conversations,” he said. If a conversation has only partially taken place, a more extensive one may be suggested.

The system also allows clinicians to accurately bill for these services. Prior to the system, Zachariah recounted, hematology and medical oncology clinicians were having the necessary conversations with their patients, “but they weren’t using the codes to bill for the service.” Traditionally, only 1 specialist in a particular field can bill for the conversation, Zachariah continued. “Despite this, our oncologists and hematologists take immense pride in [caring for] their patients, feel a strong sense of ownership over their care, and often choose to participate in subsequent conversations with patients or their families after regular working hours.” The clinicians believed they couldn’t bill for these conversations because someone from their own specialty had already billed for the day. However, “through the program, we were able to inform them that they could use billing codes to credit themselves for these conversations, allowing for appropriate relative value unit collection,” Zachariah said.

Standards for Enactment

To uphold high standards, Zachariah and a team of applied AI and data science researchers set out to ensure the “implemented AI model is transparent, appropriately vetted, and equitable by not propagating disparities,” which AI models have been known to do. “We want to be certain that we are doing our due diligence to ensure that if you’re using an AI tool, it’s a good tool,” Zachariah said. “Just because it’s AI [based] doesn’t necessarily mean that it’s good, especially in cancer. We take great pride in making sure that what we use is of high quality.”

The model is trained with a method called gradient-boosted trees, using the XGBoost library.1 This method is widely used by data scientists to achieve state-of-the-art results on a range of machine learning challenges.6 In the study, the model was given the records of 28,484 patients, both deceased and alive, and 493 features drawn from demographic characteristics, laboratory test results, flow sheets, and diagnoses to predict whether a patient would die within 90 days.1
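
To illustrate the idea behind gradient-boosted trees, the sketch below uses only the Python standard library and is a simplified stand-in, not the study's XGBoost model. Each round fits a one-split tree (a stump) to the current errors and adds a scaled copy of it to the running score. XGBoost itself uses deeper trees, second-order gradient information, and regularization; the two features (albumin, age) and all values here are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_stump(X, residuals):
    """Find the one-feature threshold split that minimizes squared error on the residuals."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, t, lm, rm)
    if best is None:
        raise ValueError("no valid split found")
    return best[1:]  # (feature index, threshold, left value, right value)


def stump_value(stump, row):
    j, t, left_value, right_value = stump
    return left_value if row[j] <= t else right_value


def fit_gbt(X, y, n_rounds=50, learning_rate=0.3):
    """Boost stumps on the negative gradient of log loss (y - predicted probability)."""
    scores = [0.0] * len(X)  # running log-odds for each training row
    stumps = []
    for _ in range(n_rounds):
        residuals = [yi - sigmoid(s) for yi, s in zip(y, scores)]
        j, t, lv, rv = fit_stump(X, residuals)
        stump = (j, t, learning_rate * lv, learning_rate * rv)
        stumps.append(stump)
        scores = [s + stump_value(stump, row) for s, row in zip(scores, X)]
    return stumps


def predict_proba(stumps, row):
    return sigmoid(sum(stump_value(s, row) for s in stumps))


# Hypothetical toy data: [albumin, age]; label 1 = died within 90 days.
X = [[2.1, 80], [2.5, 75], [3.0, 70], [3.9, 60], [4.2, 55], [4.5, 50]]
y = [1, 1, 1, 0, 0, 0]
model = fit_gbt(X, y)

high_risk = predict_proba(model, [2.0, 82])
low_risk = predict_proba(model, [4.4, 52])
print(f"high-risk example: {high_risk:.2f}, low-risk example: {low_risk:.2f}")
```

Because each stump corrects what the previous rounds got wrong, many weak splits combine into a stronger predictor, which is the property that makes the method effective on tabular health record data.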

To prevent data leakage, the researchers chose prediction dates that were at least 7 days before a patient’s death. They also avoided overrepresenting observations in which the prediction date fell within 30 days of a patient’s death, so that the model would not be trained on a disproportionate number of near-term deaths, which could introduce bias.1
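
The two safeguards can be sketched as follows. The 7-day and 30-day windows come from the description above; everything else is an assumption made for illustration, including the function names, the use of visit dates as candidate prediction dates, and the `max_share` cap, since the article does not state how overrepresentation was limited.

```python
import random
from datetime import date

MIN_GAP_DAYS = 7       # prediction date must be at least 7 days before death
NEAR_DEATH_DAYS = 30   # observations this close to death should not be overrepresented


def eligible_dates(visit_dates, death_date):
    """Keep candidate prediction dates that are at least MIN_GAP_DAYS before death."""
    if death_date is None:  # patient is alive: every date is eligible
        return list(visit_dates)
    return [d for d in visit_dates if (death_date - d).days >= MIN_GAP_DAYS]


def is_near_death(observation):
    prediction_date, death_date = observation
    return death_date is not None and (death_date - prediction_date).days <= NEAR_DEATH_DAYS


def cap_near_death(observations, max_share, rng):
    """Downsample near-death observations to at most max_share of the result (hypothetical rule)."""
    if not 0 <= max_share < 1:
        raise ValueError("max_share must be in [0, 1)")
    near = [o for o in observations if is_near_death(o)]
    other = [o for o in observations if not is_near_death(o)]
    allowed = int(max_share * len(other) / (1 - max_share))
    if len(near) > allowed:
        near = rng.sample(near, allowed)
    return other + near


# Hypothetical patient: visits on three dates, death on March 31, 2022.
death = date(2022, 3, 31)
visits = [date(2022, 3, 1), date(2022, 3, 24), date(2022, 3, 28)]
kept = eligible_dates(visits, death)  # the March 28 visit is only 3 days before death

# Hypothetical dataset: 10 observations far from death, 10 within 30 days of death.
far = [(date(2021, 1, 1), None)] * 10
near = [(date(2022, 3, 10), death)] * 10
balanced = cap_near_death(far + near, max_share=0.2, rng=random.Random(0))
print(len(kept), len(balanced))
```

Dropping dates too close to death keeps the model from learning signals that only appear in the final days, and the cap keeps the training set from being dominated by patients who were already near death.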

Adoption of the AI model was catalyzed by City of Hope’s participation in the Improving Goal Concordant Care initiative, a leadership-endorsed, multidepartmental, and multi-institutional effort by leading cancer centers around the country to improve the delivery of care so that it aligns with a patient’s values and unique priorities. Currently, Zachariah and the team of researchers are working to ensure that the model works with City of Hope’s patient population and institution characteristics, “but we’re [also] partnering with other cancer centers to see [whether] it will work for their populations as well,” Zachariah said. Other interested facilities will either use the same model or learn from it to develop tools better suited to their own characteristics, Zachariah explained.

Since acquiring Cancer Treatment Centers of America, he continued, “we now have a community oncology presence within City of Hope and are testing the model to confirm that it works not only in an academic center and the surrounding clinics but also in the community oncology setting.” The model is being evaluated in community oncology settings outside of California, including City of Hope’s new locations in Arizona, Georgia, and Illinois, that have different demographic populations, Zachariah explained. “We made it effectively easier [for clinicians] to do the right thing, and [we] leveraged the information to share with leadership so that they understood what was happening [in the field]. This allowed the leaders to provide support to the clinicians,” he said.

REFERENCES:

1. Zachariah FJ, Rossi LA, Roberts LM, Bosserman LD. Prospective comparison of medical oncologists and a machine learning model to predict 3-month mortality in patients with metastatic solid tumors. JAMA Netw Open. 2022;5(5):e2214514. doi:10.1001/jamanetworkopen.2022.14514

2. Christakis NA, Lamont EB. Extent and determinants of error in doctors’ prognoses in terminally ill patients: prospective cohort study. BMJ. 2000;320(7233):469-472. doi:10.1136/bmj.320.7233.469

3. Manz CR, Chen J, Liu MQ, et al. Validation of a machine learning algorithm to predict 180-day mortality for outpatients with cancer. JAMA Oncol. 2020;6(11):1723-1730. doi:10.1001/jamaoncol.2020.4331

4. Machine learning-triggered reminders improve end-of-life care for patients with cancer. Penn Medicine News. January 12, 2023. Accessed April 23, 2024. https://tinyurl.com/24555jmf

5. Prigerson HG, Bao Y, Shah MA, et al. Chemotherapy use, performance status, and quality of life at the end of life. JAMA Oncol. 2015;1(6):778-784. doi:10.1001/jamaoncol.2015.2378

6. Chen T, Guestrin C. XGBoost: a scalable tree boosting system. arXiv. Preprint posted online June 10, 2016. doi:10.48550/arXiv.1603.02754