New AI Tool Offers Detailed Cancer Prognosis and Recurrence Predictions

In an interview with Targeted Oncology, Aristotelis Tsirigos, PhD, discussed a study evaluating a self-taught artificial intelligence tool that can accurately diagnose cases of adenocarcinoma.

Aristotelis Tsirigos, PhD

Researchers at NYU Langone Health's Perlmutter Cancer Center and the University of Glasgow developed a self-taught artificial intelligence (AI) tool for diagnosing adenocarcinoma.1 According to Aristotelis Tsirigos, PhD, the tool accurately distinguished between lung adenocarcinoma and squamous cell cancers 99% of the time and predicted cancer recurrence with 72% accuracy, outperforming pathologists' 64% accuracy.

Lung adenocarcinoma tissue slides from the Cancer Genome Atlas were analyzed. A total of 46 key characteristics were identified, some of which were linked to cancer recurrence and survival.

Researchers aim to use the AI tool to predict survival and recurrence for up to 5 years and develop similar tools for other cancers. Additionally, they plan to integrate more data to help improve accuracy and make the tool publicly available once further testing is done.

In an interview with Targeted Oncology™, Tsirigos, study co-senior investigator, professor in the Departments of Pathology and Medicine at NYU Grossman School of Medicine and Perlmutter Cancer Center, and co-director of precision medicine and director of its Applied Bioinformatics Laboratories, discussed this promising self-taught AI tool.

Targeted Oncology: Can you discuss this AI tool and how it is used to accurately diagnose cases of adenocarcinoma?

Tsirigos: This is one of the AI tools that you may have heard about. The difference with this tool is that it is self-taught. That is important because typically, when you train a machine learning model, you need to know what the diagnosis is in the medical space. But that requires quite a bit of effort from the pathologist's side. Here, we decided to do it in a completely unsupervised way, which means the algorithm would have to teach itself what the important parts of the image are so it could go ahead and do the diagnostics. But this tool can be used in different contexts for different diseases. We are focused on lung cancer, but of course, it is applicable to different types of cancer.

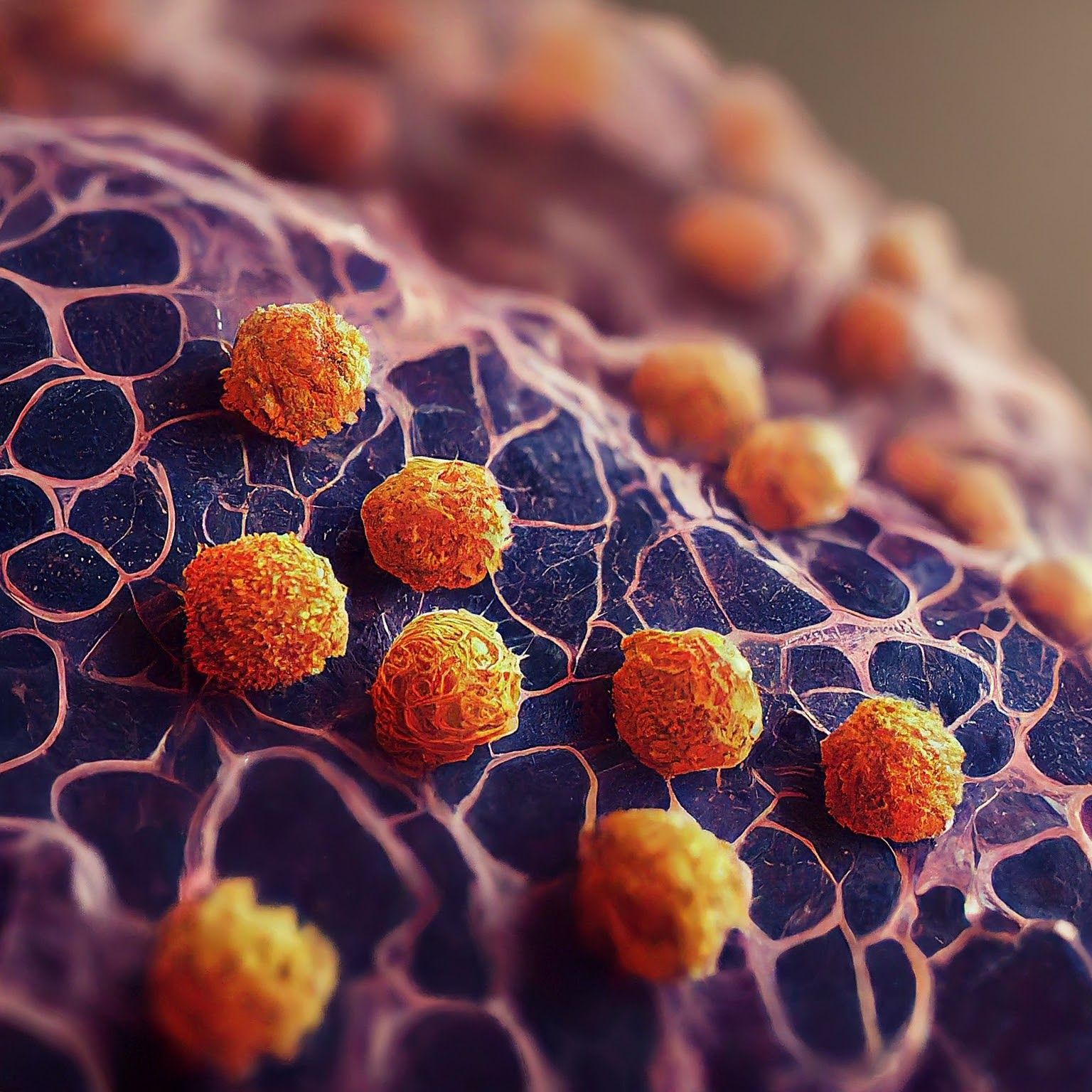

Microscopic image of non-small cell lung cancer - Generated with Google Gemini AI

Could you provide some background on the study that tested this tool?

With every machine learning algorithm, you need to train it on some data. Typically, what we do is rely on big datasets that are either internal or maybe they are published already and available to the public. We did the latter in this case. We relied on publicly available data from different studies, and we trained the algorithm, which means we fed the algorithm a set of images and the algorithm spent a few days crunching the data. Then, we decided, as I mentioned before, to initially test for lung cancer. One of the tasks we asked the algorithm to do was to make a diagnosis of lung cancer cases. Typically, there is non–small cell lung cancer, there is small cell lung cancer, but we focused on non–small cell lung cancer, which is the majority of lung cancers.

We asked the algorithm to do several things. Going straight to the diagnosis, we asked the algorithm to distinguish between 2 major subtypes of lung cancer. One is adenocarcinoma, the other is squamous cell carcinoma. The second task was focusing on early-stage adenocarcinoma to try to predict whether a patient who has undergone surgery will do well or do better or will do poorly in the future, meaning we try to predict future recurrence of tumors. That is important because, as you can imagine, a patient who is high risk, it is important to monitor them more frequently, or perhaps follow up with a more aggressive therapy, whereas patients that are of lower risk, you may want to not be so aggressive.

The algorithm was 72% accurate for predicting cancer recurrence. Is this a significant improvement compared with the 64% accuracy for pathologists?

Seventy-two percent is not perfect, so there is room for improvement. But it is definitely a big improvement over the standard that is used right now. When we compared with that standard, using the same exact parameters, the same exact cohort, the same exact group of patients, there was a significant improvement. Now, you can improve this further by incorporating more data, either on the imaging side, so train the algorithm with more data, or use additional data, meaning, for example, demographic data, or the age of the patient, or perhaps the sex of the patient [at] birth, or perhaps mutations in the tumor. There is definitely additional data that we could incorporate into the algorithm to make it better. But the point of this study was to show that just by looking at an image, perhaps using AI, [you can] improve on what pathologists can do alone.

Are there any other notable findings?

To me, the most interesting finding and what was fascinating for all of us, a multidisciplinary team of computational scientists, doctors, pathologists, was that these algorithms, when fed enough data, can teach themselves pathology. We are focused on pathology, so given enough data, the algorithm was able to teach itself. Of course, we had to validate that it works, so experts then came in and indeed highlighted that the algorithm can correctly identify patterns that pathologists have found over decades. It took the field probably decades to reach this point. The algorithm was able, through processing a lot of data, to get to that point within days.

Are there any limitations to the types of lung cancer this AI tool can diagnose?

One main limitation comes from the size of data you use for training. As we get more data, this problem is going to go away. Now, in general with AI, if we were going to move this to clinical practice and use it on real patients, we would have to be extremely careful. It has to be tested in many different ways and probably tested continuously. The algorithm makes a diagnosis by looking at an image. If this image is slightly out of focus, is slightly blurred, or is perhaps processed by a different scanner or a different software, in other words, if the input changes slightly, how can you make sure that the algorithm is not going to be affected? With any small change or bigger change you make in that process, you have to revalidate the algorithm and confirm again that it works. Adding humans in the loop is also important because human experts overseeing the algorithm are working together with the algorithm. At this point, I will say that technology is maturing and is still not mature enough. Perhaps the way to think about this is using AI algorithms as a second opinion or checking what kind of diagnosis the pathologist made to make sure that both AI and pathologists agree. If there is a disagreement, then you can ask another expert for a third opinion.
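The second-opinion workflow Tsirigos describes can be sketched in a few lines of Python. This is only an illustration of the idea, not part of the study's software: the `Review` type, the `reconcile` function, and the label strings are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    """Outcome of comparing the pathologist's call with the AI's call (hypothetical type)."""
    final_diagnosis: Optional[str]
    needs_third_opinion: bool


def reconcile(pathologist_dx: str, ai_dx: str) -> Review:
    """Treat the AI output as a second opinion on the pathologist's diagnosis.

    Assumption: diagnoses are plain text labels compared case-insensitively.
    """
    if pathologist_dx.strip().lower() == ai_dx.strip().lower():
        # Both agree: keep the pathologist's diagnosis.
        return Review(final_diagnosis=pathologist_dx, needs_third_opinion=False)
    # Disagreement: do not pick a side, refer the case to another expert.
    return Review(final_diagnosis=None, needs_third_opinion=True)
```

In this sketch the algorithm never overrides the pathologist; a mismatch only flags the case for a third expert, which keeps a human in the loop as the interview suggests.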

What are the next steps? When do you expect this AI will be available for clinical use?

The next steps will be to test this algorithm, as we say, prospectively and blindly like a clinical trial. Once this is done, there are also many other steps you need to take. For example, you may have the algorithm, but you need to build a system around it, a system that protects patient information, and also a system that can be easily used by the pathologist. The fact that there is an AI algorithm out there does not mean that it is ready for production.

How else do you think this tool might be integrated into the diagnostic process?

Imagine you are a pathologist, and you run this AI tool. You get a diagnosis, and it tells you it is a lung adenocarcinoma, high-risk, and you need to monitor this patient. If you just get this information as an expert, what kind of trust can you build on these systems? Trust is very important. To build trust, which I think is a critical component, you must have interpretable AI algorithms that can at least explain why they reached a certain diagnosis. Our algorithm is built with this in mind. It tells the pathologist not only what the diagnosis or prognosis is, but it also tells the pathologist that this is a high-risk patient because it found this piece of evidence and that relates to the pathologist because it connects them back to what they know. For example, the algorithm will tell us [someone is] high-risk because of a certain pattern, and the pathologist knows that is linked to a poor prognosis or is linked to a better prognosis. Now, that empowers the pathologist to trust the algorithm more and understand why it makes a certain diagnosis or prognosis.